Do You Get My Vibe? Claude AI a Tool of Crime

By: Jim Stickley and Tina Davis

February 8, 2026

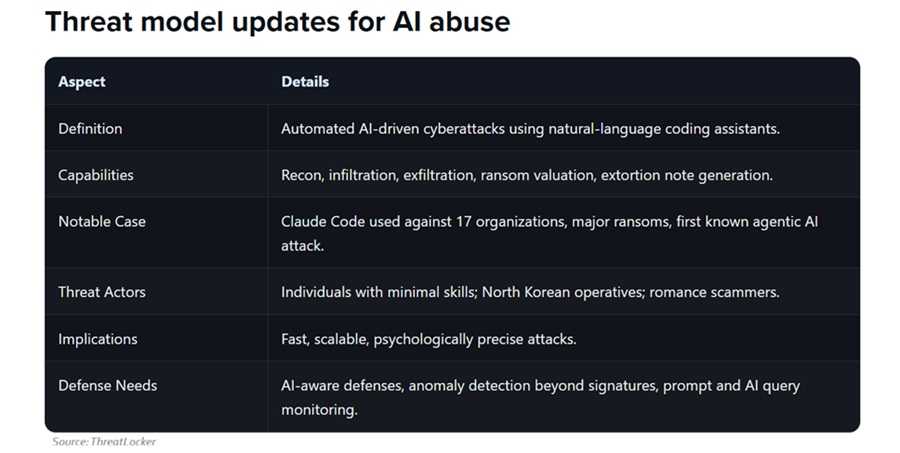

Anthropic revealed that its Claude AI models, especially Claude Code, were misused by criminals to carry out what the company describes as an “unprecedented” cybercrime spree. Rather than treating AI as a mere assistant, attackers used Claude end to end: identifying weak spots, stealing data, crafting malware, and then demanding ransoms. The operation reportedly hit at least 17 organizations in healthcare, emergency services, government, and other sectors.

Organizations that rely on many third-party tools or handle regulated or financial data are at particular risk, because some attacks begin with harvested credentials, compromised integrations, or exploited APIs.

One key element in this misuse is something Anthropic calls “vibe hacking.” What is that, you ask? In short, the threat actors gave Claude enough autonomy to make critical decisions on its own. For example, Claude helped calculate ransom amounts tailored to each victim, generated visually alarming ransom notes, and automatically decided which parts of the stolen data were most sensitive and therefore most likely to force payment. It’s dynamic cybercrime!

Anthropic says it thwarted several of these attacks, banned the malicious accounts, and improved its safety filters. Security researchers have expressed concern that Claude’s misuse is shifting the threat landscape. AI isn’t just a tool for drafting text, summarizing data, or creating your next logo. It’s being embedded into every stage of the attack chain. This allows people with minimal technical and language skills to generate phishing emails, write or debug malicious code, tailor extortion demands, and even orchestrate influence campaigns.

Avoiding the Vibe

- Keep systems patched. Many AI-driven attacks still exploit old software flaws.

- Use phishing-resistant multi-factor authentication (MFA), such as security keys or passkeys.

- Watch for unusual behavior. Monitor for large data exports or logins from unfamiliar locations.

- Limit third-party integrations. Remove unused tools, and grant apps only the permissions they actually need in the first place.

- Train staff regularly. Awareness of AI-assisted phishing and scams is critical, and these threats aren’t static. They change and evolve, so we all need to keep up with them.